- DevOps - Home

- DevOps - Traditional SDLC

- DevOps - History

- DevOps - Architecture

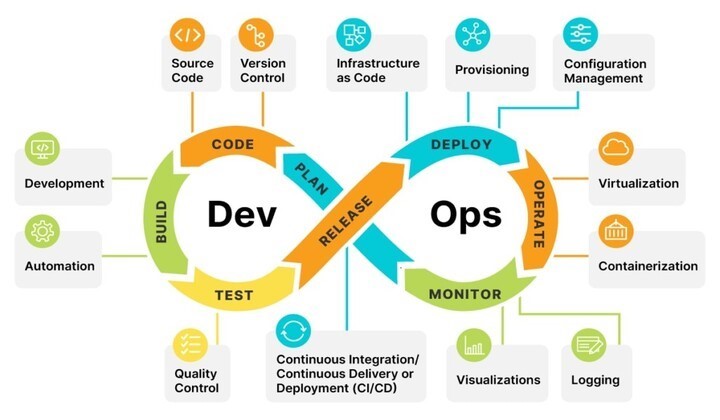

- DevOps - Lifecycle

- DevOps - Tools

- DevOps - Automation

- DevOps - Workflow

- DevOps - Pipeline

- DevOps - Benefits

- DevOps - Use Cases

- DevOps - Stakeholders

- DevOps - Certifications

- DevOps - Essential Skills

- DevOps - Job Opportunities

- DevOps - Agile

- DevOps - Lean Principles

- DevOps - AWS Solutions

- DevOps - Azure Solutions

- DevOps Lifecycle

- DevOps - Continuous Development

- DevOps - Continuous Integration

- DevOps - Continuous Testing

- DevOps - Continue Delivery

- DevOps - Continuous Deployment

- DevOps - Continuous Monitoring

- DevOps - Continuous Improvement

- DevOps Infrastructure

- DevOps - Infrastructure

- DevOps - Git

- DevOps - Docker

- DevOps - Selenium

- DevOps - Jenkins

- DevOps - Puppet

- DevOps - Ansible

- DevOps - Kubernetes

- DevOps - Jira

- DevOps - ELK

- DevOps - Terraform

DevOps - Quick Guide

DevOps - Traditional SDLC

Today, we see that companies are always trying to be more efficient. They want teams to work together better and release software faster. This is where DevOps comes in. It connects the software development and operations teams so they can do things like continuous integration and delivery. DevOps is not just about using tools, it's more like a way of working. It helps everyone share responsibility and work together.

But before DevOps, we used the Traditional Software Development Lifecycle (SDLC) for building software. In traditional SDLC, we follow a step-by-step process. First, we gather requirements, then we design, develop, test, and finally deploy and maintain the software. This process works in some cases, but it can be slow and not flexible enough for today’s fast-paced needs.

In this chapter, we will look at why the old SDLC method has problems. Then we will see how DevOps solves these issues. It gives us a more flexible and team-based way to develop software. We will also compare traditional SDLC with DevOps to understand why more teams are choosing DevOps today.

Traditional SDLC Phases

The Traditional Software Development Lifecycle (SDLC) uses a step-by-step process. It's also called the "waterfall model." Each phase happens one after the other. We can't start the next phase until we finish the previous one. The following image shows a simple breakdown of the key phases in traditional SDLC −

Requirements Gathering and Analysis

In this first phase, we focus on understanding what the project needs. Business analysts, stakeholders, and customers work together to collect all the details about what the software must do. The goal is to make sure everyone understands what the system should achieve.

We document all these needs in a Software Requirements Specification (SRS). This document is very important because it helps guide the rest of the project. If we miss something or misunderstand the requirements, it can cause big problems later.

Design Phase

After we know what we need, we move to the Design phase. Here, software architects and designers create the plan for the software. This includes how different parts of the system will work together (high-level design) and the details of each part (low-level design).

We also decide on database structure, user interface (UI), and which tech stack we will use. The result of this phase is a design document. Developers use this document as a guide when they start building the software.

Development Phase

Next, we enter the Development phase. Here, developers write the code based on the design document. They create different parts or modules of the software. This phase takes the most time because developers need to write, debug, and test the code.

Usually, different people or teams work on different parts. Sometimes, when these parts come together, there can be delays.

Testing Phase

After development, we start the Testing phase. The quality assurance (QA) team checks the software to find bugs or errors. They also make sure the software matches the original requirements. We run many types of tests like unit testing, integration testing, system testing, and user acceptance testing (UAT).

The testing phase is important for ensuring the product works well. In traditional SDLC, we do this after building the whole system, which makes fixing problems harder and slower.

Deployment Phase

When the software passes all tests, it is ready for the Deployment phase. We move the system to the production environment where users can start using it. In traditional SDLC, this often involves manual steps, which can cause delays or mistakes, especially for big systems. Once deployed, we keep an eye on the software to make sure it works as expected.

Maintenance Phase

After deployment, we enter the Maintenance phase. This involves fixing any bugs, making updates, and adding new features if needed. Maintenance can be of three types:

- Corrective − Fixing bugs

- Adaptive − Adjusting to changes in environment

- Perfective − Improving or optimizing the system

The traditional SDLC often finds this phase hard. It can be slow and costly to make changes because of the rigid, step-by-step nature of the process.

Challenges in Traditional SDLC

While Traditional Software Development Lifecycle (SDLC) worked well for many projects, it has some problems in today's fast-moving world. These problems mostly come from its stepbystep process and the lack of teamwork between development and operations teams.

Let's look at the main challenges in the traditional SDLC:

Siloed Teams

Development, testing, and operations teams do not work together. There is no clear communication between them. When an issue needs more than one team, it causes delays and slows the work.

Long Development Cycles

The step-by-step process makes projects take longer. We must finish one phase before we start the next. It takes time to respond to changes or new needs because feedback comes late.

Manual Processes

Testing, deploying, and even some development tasks are done by hand, which implies more human mistakes. Doing things manually slows down the project and makes updates less frequent.

Frequent Errors

We find bugs late because we don't integrate and test early. Fixing these problems late in the project takes time and costs more. Development teams don't get fast feedback from the testing stage.

Difficulty in Adapting to Change

The step-by-step process makes it hard to add new features or change things once we start development. If customer needs or market trends change, we might have to start the process again. This leads to delays and extra costs.

Due to these challenges, many companies now prefer DevOps. DevOps is faster, more flexible, and helps teams work together better.

How DevOps Solves the Challenges of Traditional SDLC?

DevOps helps fix many problems that we see in the traditional SDLC. It focuses more on teamwork, automating tasks, and delivering updates regularly. Here’s how DevOps solves the common problems we face with the old SDLC method:

DevOps Breaks Down Silos

DevOps brings together development, operations, and QA teams. Teams work together from the start. This means, better communication and shared work. We see fewer delays, and problems get fixed faster.

Enables Shorter Development Cycles

DevOps uses continuous integration (CI) and continuous delivery (CD). This helps us build and test features more often. Updates are delivered in smaller, more frequent parts. It gives us faster feedback and lets us release new versions more quickly.

Automates Processes

Automation is key in DevOps. It covers testing, deployment, and even managing infrastructure. We use automated tests and pipelines, so we don't have to do many things by hand. This makes fewer mistakes and speeds up work.

With tools like Infrastructure as Code (IaC), we can set up and manage infrastructure automatically. It helps us grow and maintain systems easily.

Reduces Errors

With automated testing and continuous integration, we catch problems early. Because we keep testing and integrating code often, bugs don't stay around for long. We fix them before they become big issues.

We also use monitoring tools to keep an eye on the system, which helps us fix problems before users notice them.

Adapts to Change Easily

DevOps is flexible. It helps us adjust quickly when new requirements or customer needs pop up. With continuous delivery, we can add small changes, test them, and release them fast. We don't need to restart the whole process. This makes it easier to stay updated with market trends and customer feedback.

In short, DevOps changes how we develop software. It focuses on automation, teamwork, and constant improvement. This helps us finish projects faster, make fewer mistakes, and adjust to changes better.

Traditional SDLC vs DevOps

The following table highlights how Traditional SDLC differs from DevOps −

| Aspect | Traditional SDLC | DevOps |

|---|---|---|

| Team Structure | Siloed teams (development, QA, operations work separately). | Cross-functional teams work together collaboratively. |

| Development Cycle | Sequential (waterfall) model; long development cycles. | Continuous development and delivery in small, frequent increments. |

| Testing Approach | Testing occurs at the end of the development phase. | Continuous testing throughout the development process (CI/CD). |

| Automation | Limited automation, with a focus on manual processes. | Heavy emphasis on automation for testing, deployment, and infrastructure. |

| Feedback Loops | Slow feedback; issues are identified late in the cycle. | Fast feedback loops through continuous integration and monitoring. |

| Deployment Frequency | Infrequent, often large, batch releases. | Frequent, small, and incremental releases. |

| Adaptability to Change | Rigid and less adaptable to changes once the process has started. | Agile and easily adaptable to changing requirements or market trends. |

| Error Detection | Errors are often detected late, making them costly to fix. | Early error detection through continuous integration and automated testing. |

| Collaboration | Teams operate independently with minimal collaboration. | Development, QA, and operations collaborate closely from start to finish. |

| Infrastructure Management | Manual provisioning and management of infrastructure. | Infrastructure as Code (IaC) automates infrastructure provisioning and management. |

| Release Time | Longer release times due to extensive manual testing and deployment. | Faster release times with automated pipelines and continuous deployment. |

| Responsibility | Separate responsibilities for development and operations. | Shared responsibility among all teams for development, testing, and operations. |

This comparison highlights how DevOps overcomes the limitations of traditional SDLC by encouraging collaboration, automation, and flexibility, leading to faster, more efficient software delivery.

Conclusion

In this chapter, we looked at the traditional Software Development Lifecycle (SDLC). We highlighted the problems it has. Then we explored how DevOps helps fix these issues.

The move from a strict process to a flexible way of working makes things better. It helps us catch errors early and test more often. This way, we can improve product quality. In the end, using DevOps changes software development. It makes the process faster and more responsive. This leads to better results in our fast-changing tech world.

DevOps - History

DevOps aims to make software delivery and operations better and faster. In this chapter, we will look at the history of DevOps. We will see how it started from early software development practices to become a key part of modern IT and software engineering.

We will look at the different phases that helped shape DevOps. We will talk about how DevOps practices began, the important milestones in its history, and how these changes have changed the way organizations deliver software.

Historical Context

Software development has changed a lot over time. At first, we used traditional SDLC which gave a step-by-step way to develop software. It worked well when projects were simple and predictable.

But as software got more complex, this old model didn't work so well. We needed faster results, better teamwork, and flexibility. So, new ways like Agile and later DevOps came to help solve these problems.

The Rise of Agile Development

In the early 2000s, the Agile Manifesto changed how we think about software development. Agile brought ideas that focus on being flexible, working closely with the customer, and making progress bit by bit.

Unlike traditional SDLC, Agile methods like Scrum and Kanban let teams get feedback and make improvements during the project, not after. This helped us adjust to changes quickly. Agile also helped prepare the way for DevOps, which improved teamwork and automation even more.

The Growing Complexity of Software Systems

As businesses started to depend more on software, things got a lot more complex. We had to deal with cloud computing, microservices, and distributed systems. This made us think differently about how we develop, deploy, and manage software.

Software now has many connected parts that need frequent updates. Scaling apps to handle more users became difficult with old methods.

There was a bigger need for quick delivery of new features for mobile and web apps. Competition worldwide made us deliver faster and more reliable software.

The Challenges of Traditional SDLC

Traditional SDLC worked well when projects were small and simple. But it had trouble keeping up with modern software development needs.

- Not flexible − Once development starts, it's hard to make changes.

- Slow feedback − It takes a long time to catch problems, because testing happens much later.

- Siloed teams − Developers, testers, and operations don't talk to each other enough, which causes communication problems.

- Manual work − Things like testing, deployment, and managing infrastructure are done manually, leading to more mistakes.

- Difficult to handle changes − When requirements change, it's costly and sometimes needs us to start projects over.

These problems showed us that we needed methods that are more flexible, promote better teamwork, and use more automation. This led to the rise of DevOps.

The Emergence of DevOps

In the late 2000s, we saw the rise of DevOps. It came because we needed to deliver software faster and better. There was a big gap between the development and operations teams. This often slowed down projects in the traditional SDLC.

With DevOps, we tried to bring these teams together. We used more automation and focused on continuous delivery. The main idea is to combine development, testing, and operations into one smooth process. This helped speed up software delivery and still keep quality high.

Early Adoptions of DevOps Practices

When DevOps started growing, many companies saw big improvements. Big names like Google, Netflix, and Amazon were quick to adopt DevOps. They used automation and CI pipelines to make their development faster.

We used automation to handle boring tasks like testing and deployment. This made things faster and with fewer mistakes. With Infrastructure as Code (IaC), teams could manage and set up infrastructure using code. It made scaling easier and environments more consistent.

CI/CD pipelines helped companies release updates more often and with more confidence. We also focused on monitoring and getting quick feedback. This helped us find and fix problems in production faster.

The Influence of DevOps Thought Leaders

DevOps also grew fast thanks to DevOps thought leaders. They shared their ideas through books, talks, and by leading the community.

- Gene Kim, who co-wrote The Phoenix Project and The DevOps Handbook, made DevOps popular. He told stories and did research to show how useful DevOps was for IT teams.

- Jez Humble, co-author of Continuous Delivery, helped many companies understand why CI/CD was important and how to use it.

- Patrick Debois is often called the person who came up with the term "DevOps." He helped organize early DevOps events and started DevOps Days.

- Nicole Forsgren, known for her work on the State of DevOps Report, gave us helpful data on how DevOps improves business performance.

These leaders shaped DevOps and gave us the best practices we still use today.

The Adoption of DevOps by Major Tech Companies

When big tech companies adopted DevOps, it showed how well it works on a large scale. Companies like Google, Amazon, and Netflix were some of the first to use DevOps. They used automation and continuous delivery to innovate faster and improve reliability.

- Google used DevOps ideas through its Site Reliability Engineering (SRE) It brings software engineering and operations together to keep services reliable.

- Amazon famously used DevOps to make its operations bigger. They moved from doing big releases to small, constant updates. This change helped Amazon meet huge demand while delivering new features faster.

- Netflix used DevOps to build a strong, scalable system. They used continuous delivery, microservices, and automation. They even made a tool called Chaos Monkey to test their system's strength, which became a famous part of their DevOps strategy.

These success stories from big companies made more businesses adopt DevOps. They showed that DevOps isn't just possible, it's necessary for modern software development.

Conclusion

In conclusion, we can say that DevOps has changed how development and operations teams work together. It fixed many problems in the old ways of working by bringing automation, continuous delivery, and a culture of shared responsibility. DevOps helps close the gap between these teams. It makes software delivery faster and more reliable. This improves both speed and quality.

As we look to the future, trends like AI, GitOps, and DevSecOps will make DevOps even better. These trends will help DevOps deal with the increasing complexity of modern software systems.

DevOps - Architecture

In todays fast-moving world of software development, we see that combining development and operations through DevOps architecture is very important for organizations. This method helps us work together better, makes processes simpler, and lets us deliver high-quality software quickly.

In this chapter, we will look at the basic parts of DevOps architecture. We will discuss its main principles, key parts, and the tools that help us use it. We will talk about how DevOps architecture allows practices like continuous integration and continuous delivery (CI/CD), infrastructure as code (IaC), and monitoring. All these help us create a more flexible and quick development environment.

What is DevOps Architecture?

DevOps architecture is a framework that mixes development and operations practices. It helps us create a smoother, more cooperative, and efficient way to deliver software. We focus on breaking down barriers between teams. We integrate different steps of the software development lifecycle (SDLC). This helps improve communication, automation, and constant improvement.

By using ideas from Agile and Lean methods, the DevOps architecture lets us react quickly to what the market needs. We can deliver new features faster and keep our software quality high.

Key Components of DevOps Architecture

Following are the key components of DevOps Architecture −

- Continuous Integration (CI) − CI is a practice where we automatically combine code changes from many contributors into a shared place several times a day. This process helps us find and fix problems early. It makes our software better.

- Continuous Delivery (CD) − CD builds on CI. It makes sure that the combined code is always ready to be used. This way, we can release new features and fixes quickly and reliably. It helps us get fast feedback and improve.

- Infrastructure as Code (IaC) − IaC is about managing and setting up our infrastructure through code. We dont do it by hand. This way, we can set up our infrastructure automatically, consistently, and repeatedly. It reduces mistakes and speeds up the process.

- Microservices Architecture − This design means breaking our applications into smaller services. These services are not tightly connected. We can develop, deploy, and scale them on their own. Microservices give us more flexibility. They let us work on different parts without stopping the whole system.

- Automation Tools − Automation is very important in DevOps architecture. We use tools like Jenkins, Ansible, and Terraform to automate tasks. These include testing, deployment, and managing setups. This lets our teams focus on more important work.

- Monitoring and Logging − We need to monitor our applications and infrastructure all the time. This is key to keeping our performance and reliability. Monitoring tools gather data and metrics. Logging tools collect detailed event information. This helps us find and fix issues quickly.

- Collaboration and Communication − Good teamwork between development and operations is key for DevOps architecture to work well. We use tools like Slack, Jira, and Confluence to help us communicate and manage projects. This way, everyone stays aligned and informed during the development process.

- Security (DevSecOps) − We add security into the DevOps process through DevSecOps. This makes sure security steps are part of every stage of development. This way, we can find problems early and stay compliant. We do this without slowing down our delivery.

Core Principles of DevOps Architecture

The core principles of DevOps architecture are very important for creating a team-focused and effective software development environment. These principles help to enhance teamwork and make processes easier. They also support continuous delivery and good monitoring with feedback.

Following these principles allows organizations to make their development workflows smoother. They can also improve software quality and respond faster to market demands. Here is a summary of the key principles of DevOps architecture −

| Principle | Description |

|---|---|

| Collaboration | Encourages open talks and teamwork. Development, operations, and stakeholders work together. This helps to share responsibility for quality and delivery. |

| Automation | Makes repeated tasks easier. Automation of code integration, testing, and deployment reduces mistakes. This increases overall efficiency. |

| CI / CD | Enables fast and safe software delivery. This is done by merging code changes into a central place often and automatically putting tested code into production. |

| Monitoring and Feedback Loops | Involves continuous monitoring of applications and infrastructure. This gives real-time information. Learning from failures and successes helps to improve all the time. |

DevOps Example Step By Step

To show how a typical DevOps workflow works, let's look at an example of an e-commerce app. This app wants to add a new feature. It wants to create a recommendation system for products. Here is how the DevOps process happens:

Step 1: Planning and Requirements Gathering

First, the development team works with stakeholders. They gather the needs for the recommendation system. They talk about what users expect, technical needs, and goals. This step is important to set up the next development work.

Step 2: Designing the Architecture

After gathering the needs, the team designs the architecture for the new feature. They choose a microservices architecture. This way, the recommendation system can run on its own and not depend on the main app. The design also includes how to store data and connect with current services.

Step 3: Development

Next, developers start coding the recommendation system. They break down tasks and assign them to different team members. They use version control systems like Git to manage code changes together. Each developer works on separate branches. This allows them to work at the same time without issues.

Step 4: Continuous Integration (CI)

When developers commit their code changes to the repository, a CI tool like Jenkins starts builds automatically. The CI process runs tests to check that the new code works well with the old code. If any tests fail, developers get alerts right away to fix the problems.

Step 5: Continuous Delivery (CD)

After the code passes all tests, it goes to the Continuous Delivery pipeline. This pipeline automates the deployment to staging environments for more testing. In this environment, more automated tests run. These include integration and performance tests to see how the feature works in different situations.

Step 6: Deployment

Once testing is successful in the staging environment, the recommendation system is ready to go live. Using tools like Ansible or Terraform, the team deploys the new feature to production. They watch the deployment closely to make sure it does not disturb existing services.

Step 7: Monitoring and Feedback

After the recommendation system is live, monitoring tools track its performance and user engagement. They collect metrics like response time, system load, and user interactions. Feedback from users helps check how effective the feature is and find ways to improve it.

Step 8: Continuous Improvement

Using the metrics and user feedback, the development team finds ways to make things better. They might see that the recommendation algorithms need improving or that new features could help user experience. This starts new planning sessions, and the cycle of development begins again. This keeps the app growing to meet user needs.

DevOps Toolchain

In our DevOps toolchain, we have many tools that help in different stages of software development. These tools help us work together, automate tasks, and be more efficient. This allows us to deliver good software quickly and reliably. When we use these tools together, we create a smooth workflow.

The following table provides a summary of the different types of tools we commonly use in DevOps −

| Tool Category | Tools | Description |

|---|---|---|

| Planning and Collaboration Tools | Jira | This tool helps us plan, track, and manage agile software projects. It has features for task assignments and tracking progress. |

| Trello | Trello is a visual tool. It uses boards, lists, and cards to help us organize tasks and projects. It makes it easy for teams to manage their work and priorities. | |

| Asana | Asana helps us create, assign, and track tasks. It makes communication better and shows how projects are going. | |

| Version Control | Git | Git tracks code changes. It allows many developers to work together on projects while keeping version history. |

| Bitbucket | Bitbucket is a web-based repository for version control. It supports Git and Mercurial. It has features for pull requests, code reviews, and integration with CI/CD tools. | |

| GitHub | GitHub is a platform for version control using Git. It offers collaborative features like pull requests and issue tracking. Many use it for open-source projects. | |

| CI / CD Tools | Jenkins | Jenkins is an open-source server. It helps us automate building, testing, and deploying applications. We can customize it with pipelines and plugins. |

| Travis CI | Travis CI is a cloud-based service that builds and tests code changes. It integrates directly with GitHub for easy deployment and teamwork. | |

| CircleCI | CircleCI is a platform that automates software testing and deployment. It gives quick feedback on code changes and supports Docker and Kubernetes. | |

| Configuration Management | Puppet | Puppet is an open-source tool. It helps manage and configure servers and applications. It keeps things consistent across environments. |

| Chef | Chef is a configuration management tool. It uses code to automate setting up and managing infrastructure. This helps us repeat and scale deployments easily. | |

| Ansible | Ansible is an open-source tool that makes configuration management easy. It uses a simple YAML language to help us update applications quickly. | |

| Monitoring and Logging | Prometheus | Prometheus is an open-source monitoring tool. It collects metrics and provides powerful ways to query data. It is built for reliability and scalability. |

| ELK Stack | The ELK Stack combines Elasticsearch, Logstash, and Kibana. It helps us with centralized logging and data analysis. We can visualize application health with it. | |

| Grafana | Grafana is an open-source platform for analytics and monitoring. It connects with different data sources. This allows us to create dashboards to see application metrics. |

Conclusion

In this chapter, we looked at its basic ideas, key parts, and how it has changed over time. We started by defining DevOps and why it is important today. We talked about core ideas like working together, automating tasks, and continuous integration and continuous deployment (CI/CD). These ideas help us use DevOps well. We also checked out the tools in the DevOps toolchain.

DevOps - Lifecycle

With a good DevOps strategy, we can improve our work, launch products faster, and make sure software is more reliable. In this chapter, we will go through the DevOps Lifecycle. We'll explain the stages like planning, development, deployment, and operations. We'll also see how getting feedback helps us improve every stage. Along the way, we'll share best practices for making the DevOps lifecycle work even better.

What is DevOps Lifecycle?

The DevOps Lifecycle is the ongoing process where we bring together development and operations work across the whole software delivery pipeline. It has stages like planning, development, deployment, operations, and feedback. These stages connect with each other. This helps us work better as a team, automate tasks, and make releases faster and more reliable.

Phases of DevOps Lifecycle

Having a clear DevOps lifecycle helps us deliver software smoothly and in a way that can grow with the needs. Following are the key phases of the DevOps Lifecycle −

- Planning and Design − We define the needs and plan the solution.

- Development − This is where we code, control versions, and run automated tests.

- Deployment − We use continuous integration and delivery. We also handle infrastructure as code.

- Operations − Here, we monitor, manage issues, and improve performance.

- Feedback − We make things better with regular feedback from metrics and users.

Phase 1: Planning and Design

The Planning and Design stage is the main base for good DevOps setup. It means understanding business goals, defining system needs, and designing infrastructure. Also, choosing right tools is important. This step makes sure our DevOps pipeline fits project's tech and operational needs.

Requirements Gathering

In this step, teams collect and write both functional and non-functional needs −

- Business requirements − What are the main goals?

- Technical requirements − What features or system links are needed?

- Security and compliance − What rules or security checks should we follow?

Solution Architecture

Here, we plan how the app will be built. Focus is on scaling and strength −

- Break the app into microservices or small parts.

- Plan how data moves between services and other systems.

- Find third-party services or APIs to link.

Infrastructure Design

This step shows how we will set up and manage the environment −

- Cloud vs on-premise − We choose where infrastructure will be (like AWS, Azure, or on-premise).

- Scalability − Make sure the system can grow with workload.

- Network design − Plan the network, security checks, and load balancing.

DevOps Tool Selection

Choosing right tools is key for making processes faster and simpler −

- Version control − Use tools like Git or SVN for code managing.

- CI/CD tools − Jenkins, CircleCI, or Travis CI help us with continuous integration and delivery.

- Infrastructure as Code − Tools like Terraform or Ansible automate environment setup and management.

Phase 2: Development

The Development phase is about writing, managing, and testing code in a simple and team-friendly way. We focus on automating stuff and catching problems early. This helps us deliver good code fast.

Code Development

Code development is writing and improving the app code based on the system plan −

- Break the tasks into smaller parts called sprints. It helps us finish things faster.

- Teams work together. We do code reviews and pair programming to keep code quality good.

- We follow coding rules and try to write code that can be used again.

Version Control

Version control systems help us track changes and work together without problems −

- Git, SVN − These are common tools to manage code versions.

- Branching strategies − We use methods like GitFlow or feature branching to separate different work.

- Collaboration − Many developers can work on the same project without creating issues.

Continuous Integration

Continuous integration (CI) makes sure code from different developers is added into one shared place −

- Automated builds − Tools like Jenkins, Travis CI, or CircleCI build the code automatically.

- Frequent integration − Developers add their code often to find issues early.

- Build verification − We make sure new code does not break the existing system.

Automated Testing

Automated testing helps us check if the code works well and reduces bugs −

- Unit Tests − We test small parts of the app one by one.

- Integration tests − We make sure different parts of the app work together fine.

- Test automation tools − Tools like Selenium, JUnit, or PyTest help us test things automatically.

Phase 3: Deployment

The Deployment stage is where we move code from development to the production environment. This stage is very important in the DevOps lifecycle. We use different tools and methods to make sure the code gets to users quickly and without many issues.

Continuous Delivery

In continuous delivery, we make sure code is always ready to go live. We test it automatically. After every change, we can deploy the code.

Our main goal is to deploy the code anytime. We can release changes to users more often. This helps us catch bugs early.

Infrastructure as Code

Infrastructure as Code (IaC) means we manage and set up infrastructure using code instead of doing it by hand. It makes it easy to create and manage servers, databases, and networks.

We write scripts to set up everything like servers and storage. We use tools like Terraform or Ansible to automate tasks. This saves us time and prevents mistakes.

Configuration Management

Configuration management helps us make sure all systems are set up the same way everywhere. It also keeps systems consistent over time.

We track all changes made to servers or systems. Tools like Chef, Puppet, and Ansible help us manage settings. This makes it easy to fix problems because we know what changed.

Deployment Pipelines

A deployment pipeline is a step-by-step process that code follows from development to production. It includes all stages like building, testing, and deploying.

We automate each stage to reduce the manual work. Developers push code to the pipeline, and the system does the rest. Pipelines help us deploy quickly and without errors.

Phase 4: Operations

In the Operations phase, we focus on keeping the application running smoothly. We monitor our systems and manage incidents. Our goal is to make sure everything works well and efficiently.

Monitoring and Logging

Monitoring and logging help us watch how the system performs. We track important metrics and logs to find issues quickly.

We use tools to check server health and performance. We collect logs to see what happens in the system. This helps us find problems before they affect users.

Incident Management

When something goes wrong, we need to handle it fast. Incident management is about fixing issues quickly and reducing downtime.

We have a process to report and respond to incidents. Team members know their jobs when an incident happens. We learn from each incident to prevent it in the future.

Capacity Planning

Capacity planning helps us decide how many resources we need for our applications. We make sure we have enough servers and storage for users.

We look at past usage to predict future needs. We adjust our resources to avoid slowdowns or crashes. This way, we can give a good experience for users.

Performance Optimization

Performance optimization is about making our applications run faster and better. We find ways to improve speed and efficiency.

We search for bottlenecks in the system and fix them. We test different setups to see what works best. Our goal is to keep users happy with fast response times.

Phase 5: Feedback and Improvement

In the Feedback and Improvement phase, we focus on learning from our processes and making them better. We want to improve our work and results by getting constant feedback and doing regular checks.

Continuous Feedback Loops

Continuous feedback loops help us gather input from users and team members regularly.

We ask for feedback after each deployment or big change. User insights help us see what works and what doesn't. We change our processes based on this feedback for better results.

Retrospective Meetings

Retrospective meetings are important for thinking about our work. We hold these meetings after each sprint or project. Team members share what went well and what we can improve. We create action items to fix issues in the next cycle.

Data-Driven Decision Making

Data-driven decision-making helps us make choices based on facts and numbers. We look at performance data to guide our actions. This way, we reduce guesswork and get better outcomes. We track key performance indicators (KPIs) to measure our success.

Iterative Development

Iterative development lets us improve our products bit by bit. We work in small cycles to release updates more often. Each cycle builds on the last one, improving features. This method helps us respond quickly to changes and user needs.

Conclusion

In this chapter, we looked at the key parts of the DevOps lifecycle. This includes planning, development, deployment, operations, feedback, and improvement. We talked about the importance of practices like automation, collaboration, and data-driven decision-making. These practices help us streamline processes and improve product quality.

When we use these practices, our teams can work better. We can respond fast to user needs and keep improving our workflows. Embracing the DevOps lifecycle helps us deliver high-quality software faster. This gives more value to users and makes our development process more successful.

DevOps - Tools

In this chapter, we will look at the different DevOps tools that teams use in various stages of the DevOps lifecycle. We will explore tools for version control, continuous integration, continuous delivery, infrastructure as code, and configuration management. We will also cover tools for monitoring, containerization, and cloud platforms. We will see what these tools do, how they help, and how they are set up to make the DevOps process better.

By the end of this chapter, you will have a good idea of the main tools that power DevOps and how they help in the success of software projects.

What are DevOps Tools?

DevOps tools are software that help us automate and manage different parts of the DevOps lifecycle. These tools let development and operations teams work together better. They help speed up how we deliver apps and make sure we keep the quality high. DevOps tools do many things. They help with version control, continuous integration, deployment, monitoring, and managing infrastructure.

DevOps tools cover everything from planning and development to deployment and monitoring. These tools make it easier to communicate and work together between teams. They automate tasks we do often, which lowers mistakes and saves time.

The Importance of DevOps Tools

DevOps tools are important because they make software development and delivery faster and more reliable. They help us handle complex systems while keeping deployments quick and stable. Without these tools, we would face slowdowns and more mistakes from doing things manually.

DevOps tools help us automate things like testing, deployment, and monitoring. They make it easier for teams to work together. We get continuous feedback, which means we can improve things faster. In addition, DevOps tools help keep our code quality high and reduce downtime when apps are running in production.

Top DevOps Tools

The following table highlights the top DevOps tools and their categories −

| Category of Tools | Tool Name | Features and Benefits | Use Cases |

|---|---|---|---|

| Version Control Systems | Git | Distributed version control Branching and merging support Tracks code changes efficiently |

Source code management Open-source project collaboration |

| SVN | Centralized version control Simple branching Strong security controls |

Centralized projects Teams needing strong admin control over repositories |

|

| Continuous Integration Tools | Jenkins | Open-source CI tool Wide plugin support Automated builds and testing |

Automating build pipelines Continuous integration for large projects |

| CircleCI | Cloud-based CI/CD tool Fast build execution Easy GitHub integration |

Fast, parallel builds Cloud-based projects with frequent deployments |

|

| Travis CI | Cloud-hosted CI/CD tool Pre-built environments GitHub integration |

Automated testing for open-source projects Simple deployment pipelines |

|

| Continuous Delivery Tools | Spinnaker | Multi-cloud continuous delivery Supports advanced deployment strategies |

Managing cloud-native applications Blue/green and canary deployments |

| GoCD | Pipeline as code Strong artifact management Easy rollback to previous versions |

Continuous delivery pipelines Configuring and visualizing complex workflows |

|

| Infrastructure as Code Tools | Terraform | Cloud-agnostic infrastructure Declarative configuration Manages dependencies automatically |

Infrastructure provisioning across multiple cloud providers Automating infrastructure |

| Ansible | Agentless architecture Simple YAML-based configuration Fast deployment of configurations |

Automated configuration management Application deployment and provisioning |

|

| Puppet | Model-driven configuration Centralized control Strong reporting features |

Managing complex infrastructures Automating system administration tasks |

|

| Configuration Management Tools | Chef | Code-driven infrastructure automation Strong testing framework Cloud integrations |

Automating server configuration Continuous deployment in multi-cloud environments |

| Ansible | Simple configuration management Push-based deployment Easy to use for beginners |

Rapid configuration of servers Ideal for lightweight automation |

|

| Puppet | Manages configurations in large environments Node-based reporting Scalable infrastructure |

Automating infrastructure at scale Continuous configuration management |

|

| Monitoring and Logging Tools | Prometheus | Time-series data monitoring Customizable alerting Strong Kubernetes support |

Monitoring cloud-native applications Tracking performance metrics and uptime |

| Grafana | Visualizes metrics Supports multiple data sources Customizable dashboards |

Real-time monitoring dashboards Graphing metrics for system performance |

|

| ELK Stack | Centralized logging Search and visualize log data Scalable log storage and processing |

Managing logs from large-scale applications Centralized log analysis and troubleshooting |

|

| Containerization Tools | Docker | Lightweight containers Easy container orchestration Portable across environments |

Running isolated apps in containers Building and shipping applications consistently |

| Kubernetes | Automated container orchestration Manages scaling and load balancing Self-healing features |

Orchestrating containers across clusters Scaling applications efficiently |

|

| Cloud Platforms | AWS | Extensive cloud services Global infrastructure Strong security features |

Hosting scalable web apps Data storage and processing |

| Azure | Hybrid cloud solutions Enterprise-grade security Integration with Microsoft products |

Hosting enterprise apps Hybrid cloud environments |

|

| GCP | Strong machine learning tools High-performance computing Cost-effective solutions |

Big data analytics Cloud-native app development |

Conclusion

In this chapter, we looked at different DevOps tools in important areas like version control, continuous integration, continuous delivery, infrastructure as code, configuration management, monitoring, and cloud platforms.

We talked about tools like Git, Jenkins, Terraform, Docker, and AWS. We explained their features, benefits, and how we can use them. These tools help us automate tasks, work together better, and make our teams more efficient. When we use these tools, it makes our workflows simpler, increases productivity, and helps deliver software faster and more reliably.

FAQs on DevOps Tools

In this section, we have collected a set of FAQs on DevOps Tools followed by their answers –

1. Which DevOps tool is best?

The "best" DevOps tool depends on what we need for our project. Some of the common ones are Jenkins for CI/CD, Git for version control, Docker for containers, Kubernetes for managing containers, and Ansible for configuration. When choosing, we should look at features, how well it scales, support from the community, and how it works with our current tools.

2. Is Jira a DevOps tool?

Jira is mostly used for project management and tracking issues. But we can connect it with other DevOps tools to help manage the full development process. While it's not exactly a core DevOps tool, it still helps us in planning, tracking, and coordinating the tasks in a DevOps setup.

3. Is DevOps a tool?

No, DevOps is not a single tool. It's more like a set of ideas and methods that help dev and ops teams work better together. It's about using different tools and technology to automate tasks and make the software development and delivery faster.

4. What is Jenkins used for?

Jenkins is mostly used for CI/CD. It helps automate the process of building, testing, and deploying software. We can connect it with version control systems, testing tools, and environments for deployment. This helps in setting up a smooth continuous delivery pipeline.

5. Is Docker a CI/CD tool?

Docker by itself is not a CI/CD tool, but it is a very important part of CI/CD pipelines. It helps us package apps and their dependencies in containers. This makes sure the environment is the same across development, testing, and production. CI/CD tools like Jenkins use Docker to build, test, and deploy apps that run in containers.

DevOps - Automation

In this chapter, we will look at the different sides of automation in DevOps. We'll start by explaining what automation means in DevOps. Then, we’ll talk about different types of automation and how they help us. We will cover topics like infrastructure automation, managing configurations, and continuous integration. Testing and monitoring automation will also be part of this.

In addition, we'll share some best practices to adopt automation, discuss the challenges we face, and consider security to keep the environment safe.

What is Automation in DevOps?

Automation in DevOps is the key part of modern software development. It helps us speed up our workflows, reduce mistakes, and make our systems more efficient. By automating tasks like code deployment, managing infrastructure, and testing, we can focus on more important things like improving the product and solving bigger problems.

DevOps automation lets us deliver software faster and with better quality. It also keeps things consistent and reliable in production.

Types of Automation

Automation in DevOps can be divided into several key types. Each type has its purpose and helps make our workflows smoother. By knowing these types, we can set up good automation strategies that fit our needs.

Infrastructure Automation

Infrastructure automation means we automate the setup, configuration, and management of servers, networks, and other parts of infrastructure. This helps us deploy resources quickly and in a consistent way.

- Configuration Management − Configuration management makes sure all systems in our infrastructure are set up correctly and stay that way over time. We use tools like Ansible, Puppet, and Chef for this.

- Infrastructure as Code (IaC) − IaC lets us manage infrastructure with code. We can version it and deploy it just like application code. This gives us more speed and flexibility. Here, we commonly use tools like Terraform and AWS CloudFormation.

Deployment Automation

Deployment automation makes the process of deploying applications to different environments easier. This helps us release faster and more reliably.

- Continuous Integration (CI) − Continuous Integration is when we automatically test and merge code changes into a shared repository. CI tools like Jenkins, Travis CI, and GitLab CI help with this.

- Continuous Delivery (CD) − Continuous Delivery builds on CI. It makes sure that code changes are ready for release to production. This helps us deliver new features faster.

Testing Automation

Testing automation helps us make the testing process more efficient and effective. This allows us to get feedback faster and improve software quality.

- Unit Testing − Unit testing checks individual parts of the application on their own. This makes sure each part works as it should.

- Integration Testing − Integration testing makes sure different modules or services work together correctly. This is important for spotting issues in how components interact.

- End-to-End Testing − End-to-end testing checks the whole application from start to finish. This makes sure the system works as expected in real-world situations. Key points include:

Monitoring and Logging Automation

Monitoring and logging automation helps us keep an eye on systems for performance and reliability. This lets us resolve issues before they get serious.

- Alerting − Alerting systems notify teams about problems or failures right away. This helps us respond quickly to potential issues.

- Analytics − Analytics automation involves gathering and analyzing data from applications and infrastructure. This gives us insights for better decisions.

Challenges and Best Practices of DevOps Automation

The following table highlights the challenges and best practices in DevOps automation −

| Category | Description |

|---|---|

| Best Practices for Automation | Start Small − We should begin with low-risk tasks. This helps us build confidence and get quick wins. |

| Incremental Adoption − We can gradually increase our automation efforts. This avoids overwhelming teams and makes the transition smoother. | |

| Measure and Improve − We need to keep an eye on automated processes. Collect feedback and use metrics to make our workflows better. | |

| Ensure Collaboration − We must encourage communication between development and operations teams. This helps align goals and share insights. | |

| Maintain Documentation − We should keep clear documentation of our automated processes. This makes it easier to understand and onboard new team members. | |

| Challenges and Considerations | Complexity − Automation can make things complicated. We need to manage it well and have clear documentation to avoid confusion. |

| Cost − The initial costs for automation tools and training can be high. We need to think about cost and benefits. | |

| Skills Gap − Teams might need new skills or training to use and manage automation tools well. | |

| Resistance to Change − Some team members may not want to adopt automation. They may fear losing their jobs or changing their workflows. | |

| Security Concerns − If we automate processes without proper security, we can make our systems vulnerable. |

Conclusion

In this chapter, we looked at the important role of automation in DevOps. We highlighted different types like infrastructure, deployment, testing, and monitoring automation. We talked about best practices for successful use. Starting small and encouraging teamwork are some of these practices. We also discussed challenges we face, like complexity and security concerns.

By using automation well, we can improve our productivity. We can make fewer mistakes and deliver high-quality software faster. This helps organizations respond quickly to market needs and stay competitive in a fast-changing tech world.

DevOps - Workflow

Read this chapter to learn about the DevOps Workflow. Here you will learn why it is important to have a clear workflow. We will also look at each step, from planning and coding to deployment and operations.

By the end of this chapter, you will understand how to create a DevOps workflow that improves how your team works together and boosts efficiency.

Step-by-Step DevOps Workflow

The first step in any DevOps workflow is planning and design. We need a strong base to make sure everything goes smoothly in development, testing, and deployment. For an e-commerce website, this part sets the path for the whole project.

Step 1: Define Project Goals and Objectives

We start by setting clear goals for the e-commerce platform. For example, give customers a smooth shopping experience, make sure it can handle high traffic during big promotions.

Step 2: Gather and Analyze Requirements

Next, we gather both business and technical needs:

- Business needs − Easy-to-use interface, safe payment methods, and fast checkout.

- Technical needs − Support different payment methods, link with inventory systems, and make sure it's mobile-friendly.

Step 3: Design the Solution Architecture

We design an architecture that's scalable and secure:

- Frontend − A web app using frameworks like React or Angular.

- Backend − Microservices built with Node.js, Python, or Java.

- Database − Choose SQL (like PostgreSQL) or NoSQL (like MongoDB).

- Cloud Infrastructure − Use AWS, GCP, or Azure for hosting and scaling.

Step 4: Select Appropriate DevOps Tools

We choose tools that match the project needs:

- Version Control − Use GitHub for managing code.

- CI/CD − Jenkins for setting up continuous integration and delivery.

- Infrastructure as Code (IaC) − Terraform to automatically manage cloud resources.

In the development phase of DevOps, we focus on writing and integrating code. This stage is important for making sure features like product catalog, shopping cart, and payment systems in an e-commerce website are developed smoothly. We use automation to reduce mistakes and make things faster.

Step 5: Develop Code Using Version Control

We use version control systems (VCS) like Git to manage code when working with a team. On the e-commerce platform, developers might work on different things like checkout process or product listing. Git lets them work together without messing up each other's changes.

- Branching − Developers make separate branches for each feature (like checkout-feature or search-bar-update). This way, they can work independently without breaking the main code.

- Merging − Once the feature is done and tested, we merge it back into the main

Step 6: Implement Continuous Integration Practices

Continuous Integration (CI) helps us keep the code clean and prevent big issues when merging code. Tools like Jenkins or CircleCI automatically build and test the code when developers push changes.

For example, when a new feature like "add-to-cart" is developed, CI makes sure it fits with the rest of the code without causing problems.

Step 7: Write Automated Tests

We write automated tests to catch bugs early and ensure our code works well. In an ecommerce site, tests might check things like product searches, adding items to the cart, or completing a purchase.

- Unit Tests − Test small parts of the code, like making sure the payment module calculates taxes right.

- Integration Tests − Check if different parts (like login and checkout) work well together.

- End-to-End (E2E) Tests − Simulate what users would do, like selecting a product, adding it to the cart, and checking out.

Step 8: Conduct Code Reviews

Code reviews help improve the quality of the code. Before we merge a new feature, like a product recommendation engine, a senior developer or peer checks the code to catch problems and ensure it follows best practices.

In DevOps workflow, the testing phase makes sure the platform is stable and works well. We focus on testing different parts of the e-commerce site to be sure it runs smooth and gives a good shopping experience to users.

Step 9: Execute Unit Tests

Unit tests check small pieces of code, like a function or component. In an e-commerce website, this might mean checking things like how it calculates total price or checks discount codes.

Make sure each part of the platform works as it should, by itself. For example, test the function that figures out shipping cost based on where the customer lives.

Step 10: Perform Integration Testing

Integration testing checks if different modules work well together. In an e-commerce site, this could be making sure the payment gateway and checkout process work fine, and that the inventory gets updated after someone buys something.

Ensure that different services (like payment and order management) work together without any problem. For example, test if inventory count goes down after an item is bought and the payment is done.

Step 11: Conduct System Testing

System testing checks the whole platform, not just parts of it. We test to see if everything works as it should in a real-world situation.

Test the complete system to make sure all features are working fine together. For example, simulate a user browsing the site, adding things to the cart, and buying them, checking for things like slow loading or payment errors.

Step 12: Conduct Acceptance Testing

Acceptance testing checks if the platform meets business needs and works for users. This is usually the last stage before the site goes live.

Make sure the system meets the goals of the business. For example, test if users can search for products, add them to the cart, and checkout without issues. We want to make sure the experience is good for users.

In the deployment phase, we get our e-commerce website ready for customers. This involves setting up resources, deploying the application, and making sure everything runs well. Let's break down the key steps.

Step 13: Use Infrastructure as Code to Provision Resources

We use infrastructure as code (IaC) to set up the servers and other resources we need for our e-commerce site. This way makes it easy to create and manage infrastructure without doing it by hand.

IaC helps us set up resources quickly and in the same way. We can easily copy our setup in different places, like development and production. We can use tools like Terraform or Ansible to define our infrastructure in code.

Step 14: Configure Environments

Next, we set up different environments for our e-commerce website. Each environment has a different job.

- Development − This is where we build and test new features.

- Staging − Here, we test the site with all the features together, just like it will be in production.

- Production − This is the live site where customers shop.

Configuring these environments well makes sure everything works as it should before going live.

Step 15: Deploy Applications

Once the environments are ready, we deploy our e-commerce application. This step moves the code and features to the production environment so users can use them.

We upload the code, set up databases, and check that all services are running. For example, when we launch a new payment gateway, we deploy it so customers can use it right away.

Step 16: Implement Continuous Delivery Pipelines

We use continuous delivery (CD) pipelines to automate the deployment process. This helps us release new features faster and with less risk.

Whenever we change the code, the CD pipeline automatically tests and deploys those changes. It means we can release updates for the e-commerce site, like new product features or bug fixes, quickly and reliably.

In the operations phase, we focus on keeping the e-commerce website running well. This means we monitor performance, manage issues, and make sure everything is secure and follows the rules.

Step 17: Monitor Application and Infrastructure Performance

We need to watch how well the e-commerce site is performing. This includes checking both the application and the infrastructure. Tools like Prometheus and Grafana help us track performance metrics, such as page load times, server health, and user activity. By monitoring these metrics, we can see any problems before they affect customers.

Step 18: Manage Incidents and Troubleshoot Issues

Even with our best efforts, issues can still happen. When they do, we must manage incidents quickly. For example, if users have trouble checking out, we need to act fast to find out what is wrong and fix it.

Step 19: Optimize Resource Utilization

We want to make the best use of our resources. This includes servers, databases, and storage. To optimize resource utilization, we:

- Scale Resources − We add or remove servers based on how much traffic we have. For example, during a sale, we may need more servers to handle the extra traffic.

- Review Costs − We check if we are spending too much on resources and look for ways to save.

This way, we keep the site running well without wasting money.

Step 20: Ensure Security and Compliance

Keeping the e-commerce site safe is very important. We must protect customer data and follow the rules. Here's how we do it:

- Regular Security Audits − We do audits to find and fix any weak points.

- Data Encryption − We use encryption to keep user data safe when they make transactions.

- Compliance Checks − We ensure the site meets rules like GDPR for protecting user data.

In the feedback and improvement phase, we focus on making our e-commerce website better based on what users say and the data we see. This helps us make sure we meet customer needs and always improve our processes.

Step 21: Gather Feedback from Stakeholders

We ask for feedback from everyone involved with the e-commerce site, including customers, team members, and business partners.

Step 22: Iterate on the Process Based on Feedback and Insights

We take feedback and data analysis and use them to change our processes. This means we make updates and improvements based on what we learn.

We often update our features, fix bugs, and make user experience better. For example, if the feedback shows that mobile users find it hard to navigate the site, we might redesign the mobile layout to make it easier to use.

Conclusion

In this chapter, we looked at the main phases of a DevOps workflow. These phases are planning and design, development, testing, deployment, operations, and feedback and improvement. We discussed all of this in the context of an e-commerce website.

DevOps - Pipeline

In this chapter, we will explain what DevOps pipelines are and why they are important. We will also look at the main parts of a DevOps pipeline. These parts include source code management, continuous integration (CI), continuous delivery (CD), deployment strategies, and feedback from monitoring. We will help you set up your DevOps pipeline.

What are DevOps Pipelines?

DevOps pipelines are processes that run automatically. They help us with continuous integration and continuous delivery (CI/CD) of software applications. These pipelines have a series of steps that code changes go through. This starts from the first development and goes to deployment in a production environment.

The main goal of a DevOps pipeline is to make the software development lifecycle smoother. This helps us deliver high-quality software faster and more reliably.

Key Components of a DevOps Pipeline

A DevOps pipeline has several key parts that work together. They help us make the software development and deployment process easier. Each part is important to make the pipeline work well and quickly.

Source Code Management

Source Code Management (SCM) systems help us track and manage changes to our code. They make sure we have version control and allow collaboration among developers.

Tools like Git, Subversion, Mercurial are used in source code management. The key functions include version control, branching and merging strategies, collaboration and code reviews

Continuous Integration (CI)

Continuous Integration automates how we add code changes into a shared place. It helps us ensure that new code merges smoothly and gets tested before we go to the next steps.

Continuous Delivery (CD)

Continuous Delivery builds on CI by automating how we deliver applications to testing and production environments. It makes sure that the code is always ready to be deployed.

Deployment

Deployment is when we move the application to a production environment. This is where endusers can use it. Good deployment strategies help us reduce downtime and keep things reliable.

Monitoring and Feedback

Monitoring tools help us keep an eye on application performance, user actions, and system health. Feedback is very important for finding problems early and keeping software quality high.

The key aspects of Monitoring and Feedback include real-time performance monitoring like latency and error rates, logging and alerting systems to catch issues early, and user feedback channels to collect ideas for future improvements.

How to Set Up a DevOps Pipeline?

Setting up a DevOps pipeline has several steps. These steps help us automate the software development lifecycle. Below is a guide to creating a DevOps pipeline with code examples.

Step 1: Set Up Your DevOps Environment

First, we need to choose our tools and technologies. Some popular choices for source code management are Git. For continuous integration and delivery, we can use Jenkins or Travis CI. For containerization, we often use Docker, and for orchestration, we choose Kubernetes.

After choosing our tools, we need to install the software on our local machine or server. To install Docker, we run −

sudo apt-get install docker-ce docker-ce-cli containerd.io

To install Jenkins on Ubuntu, we can use these commands −

sudo apt-get update sudo apt-get install openjdk-11-jre wget -q -O - https://pkg.jenkins.io/debian/jenkins.io.key | sudo apt-key add - echo deb http://pkg.jenkins.io/debian-stable binary/ | sudo tee /etc/apt/sources.list.d/jenkins.list sudo apt-get update sudo apt-get install jenkins

Step 2: Create a Source Code Repository

Next, we create a source code repository. We start by making a Git repository for our project. We can do this by making a new directory and running git init. Heres how −

mkdir my-app cd my-app git init

It is also important to use good branching strategies. We can create branches for features, bug fixes, and releases with commands like git checkout -b feature/my-new-feature.

Step 3: Implement Continuous Integration

After setting up source code management, we implement continuous integration (CI). We start by configuring CI tools like Jenkins. We create a new Jenkins job for our project and connect it to our Git repository.

Next, we write build scripts to automate the build process. We might create a script called build.sh that has commands to build our application. Here's a simple example −

#!/bin/bash echo "Building the project..." # Add commands to build your application

We also need to add automated tests into our CI process to run unit and integration tests with each code commit. A Jenkins pipeline configuration could look like this −

pipeline {

agent any

stages {

stage('Build') {

steps {

sh './build.sh'

}

}

stage('Test') {

steps {

sh './run_tests.sh'

}

}

}

}

Step 4: Implement Continuous Delivery

After continuous integration, we implement continuous delivery (CD). We set up deployment pipelines in Jenkins or other CI/CD tools to automate the deployment. For example, we can set up a Jenkins pipeline like this −

pipeline {

agent any

stages {

stage('Deploy to Staging') {

steps {

sh './deploy_to_staging.sh'

}

}

stage('Deploy to Production') {

steps {

input 'Approve Production Deployment?'

sh './deploy_to_production.sh'

}

}

}

}

We should also manage environment configurations well. We can use configuration files to handle different environments like development, staging, and production.

Step 5: Containerization and Orchestration

Containerization is a key step in a DevOps pipeline. We can use Docker to create a Dockerfile that defines our application’s environment. Here's a simple example of a Dockerfile −

FROM node:14 WORKDIR /app COPY package*.json ./ RUN npm install COPY . . CMD ["npm", "start"]

After we create our Docker image, we manage our containers with Kubernetes. We can create a deployment manifest in YAML format, like deployment.yaml −

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-app

spec:

replicas: 2

selector:

matchLabels:

app: my-app

template:

metadata:

labels:

app: my-app

spec:

containers:

- name: my-app

image: my-app:latest

ports:

- containerPort: 3000

Step 6: Automate Deployment

For the deployment phase, we need to use good strategies like blue-green deployments, rolling updates, or canary releases based on our application needs. We can use Infrastructure as Code (IaC) tools like Terraform or Ansible to automate infrastructure. A simple Terraform script might look like this −

provider "aws" {

region = "us-west-2"

}

resource "aws_instance" "app" {

ami = "ami-0c55b159cbfafe1f0"

instance_type = "t2.micro"

}

Step 7: Monitoring and Logging

Lastly, we need to set up monitoring and logging to keep our applications healthy. We can use tools like Prometheus and Grafana to watch application performance and system health. For logging, we can use the ELK Stack, which has Elasticsearch, Logstash, and Kibana. A basic Logstash configuration might look like this −

input {

file {

path => "/var/log/my-app/*.log"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["http://localhost:9200"]

index => "my-app-logs-%{+YYYY.MM.dd}"

}

}

Conclusion

Setting up a DevOps pipeline means we choose the right tools. We configure our environments and automate processes for building, testing, and deploying applications. By following these steps and using the code examples, we can create a strong and effective DevOps pipeline for our projects.

FAQs of DevOps Pipeline

In this section, we have collected a set of FAQs on DevOps Pipeline followed by their answers –

1. What is a CI/CD pipeline in DevOps?

A CI/CD pipeline is a series of steps that happen automatically in software development and delivery. We have continuous integration (CI) where code changes get built, tested, and added to a shared repository. Then we have continuous delivery (CD) where the built and tested code gets sent to different environments. CI/CD pipelines help us improve software quality. They make delivery faster and let us release updates often.

2. What is a Jenkins pipeline?

A Jenkins pipeline is a set of steps in a CI/CD pipeline that we run in a specific order. We can define pipelines in Jenkins using a declarative way or scripted way. This gives us the chance to customize and be flexible. Jenkins pipelines can have steps for building, testing, deploying, and monitoring our applications.

3. Is Kubernetes a CI/CD tool?

Kubernetes is not a CI/CD tool by itself. But it is very important in many CI/CD pipelines. Kubernetes is a platform that helps us manage and scale containerized applications. It has features like deployment, scaling, and self-healing. This makes it great for automating how we deploy and manage applications in a CI/CD pipeline.

DevOps - Benefits

Read this chapter to learn why more and more companies are choosing DevOps to solve the issues they face with the traditional Software Development Life Cycle (SDLC). We will talk about how DevOps helps with faster software delivery, better quality, and improved teamwork. We will also see what kind of companies switch to DevOps and the problems it helps fix. Lastly, we will discuss why DevOps is now very important for modern businesses.

What are the Benefits of DevOps?

We see more and more companies switching to DevOps because it helps solve problems that come with the old way of doing things. They want to be faster, work together better, and release their software quicker. In today's fast market, where speed matters, DevOps has become a key strategy for companies.

DevOps helps fix problems like delays, poor communication between teams, and slow reaction times. Plus, it still keeps the software quality and reliability high.

Here are the benefits of moving towards DevOps −

1. Faster Time to Market

DevOps helps teams develop software faster, so companies can release new features, updates, or bug fixes quickly. For example, Amazon releases new code every 11.7 seconds using DevOps.

CI/CD pipelines help by automating builds, tests, and deployments, which cuts down on manual work and speeds up releases.

2. Better Collaboration and Communication

DevOps breaks the walls between development, operations, and testing teams. Everyone shares responsibility. Tools like Slack, Jira, and GitHub help teams communicate in real-time, making work easier and faster. This way, issues get solved faster, and productivity goes up.

3. Better Quality and Reliability

DevOps uses automated testing and monitoring, making sure that software is tested throughout the process. For example, Netflix uses "Chaos Engineering" to test its systems for any failure, improving its system’s reliability. Automation catches bugs early, so fewer problems make it to production.

4. More Frequent Deployments

Old development models only allow a few deployments each year. But DevOps allows multiple deployments daily or weekly, based on needs. Facebook, for example, uses DevOps for continuous deployment to deliver new features quickly. Automated workflows and testing make sure frequent releases are stable.

5. Scalability and Flexibility

DevOps works with cloud services like AWS, Google Cloud, and Azure. These platforms offer flexible infrastructures that grow with company needs. Infrastructure as Code (IaC) helps teams manage their infrastructure through code, which makes scaling easier.

6. Shorter Recovery Time

DevOps helps teams find and fix issues faster, cutting down the Mean Time to Recovery (MTTR). Etsy, for example, reduced its recovery time from an hour to just a few minutes using DevOps.

Monitoring tools like Prometheus and Grafana provide real-time information on system health, making it easier to catch and fix problems.

7. Security Improvements

DevOps introduces DevSecOps, bringing security practices into the development cycle early on. Automated security tests catch vulnerabilities before the software is released. For example, Google adds security checks in their CI/CD pipelines, ensuring all code is secure before production.

8. Cost Efficiency

DevOps cuts costs by automating repetitive tasks and reducing manual work. It also optimizes resources, especially with cloud infrastructure, lowering waste and improving cost management.

In the end, businesses adopt DevOps for faster releases, better teamwork, and more stable systems. With more companies using cloud computing, microservices, and containers, DevOps is now essential for staying ahead in today's tech world.

Challenges in Traditional SDLC

The following table highlights why it can be challenging to following Traditional SDLC methods in the new age of software development −

| Challenge | Description | Example |

|---|---|---|

| Lack of Flexibility | All requirements are fixed early, making changes harder later. | Changes in the middle of the project, like new market needs, are hard to add and cause delays. |